Fate has not been kind to TikTok in the U.S. lately, as it swings between political reversals, legal battles and billion-dollar bidding wars. One day it is banned, and the next day it is back with a presidential blessing. Now several high-profile investors are taking a shot at acquiring the viral platform, and the question remains: who will own the app that creates trends, fuels influencer careers and keeps millions scrolling at 2 AM? As the battle over TikTok heats up, let’s break down what has happened so far and who has their eyes on buying it.

TikTok’s Ongoing Controversy:

The last four years have been turbulent for TikTok, which has had to fight for its place in the U.S. amid intense debate. The app is owned by the Chinese company ByteDance, and this has raised concerns in the U.S. that the Chinese government could gain access to its users’ data. These concerns have led to numerous legal actions, executive orders and now, possibly, a forced sale of TikTok’s U.S. operations. Adding to the uncertainty, TikTok suffered a short-lived outage in the U.S. last month, leaving millions of users in suspense. The app was restored quickly, but the episode reminded users how fragile its hold on the country is.

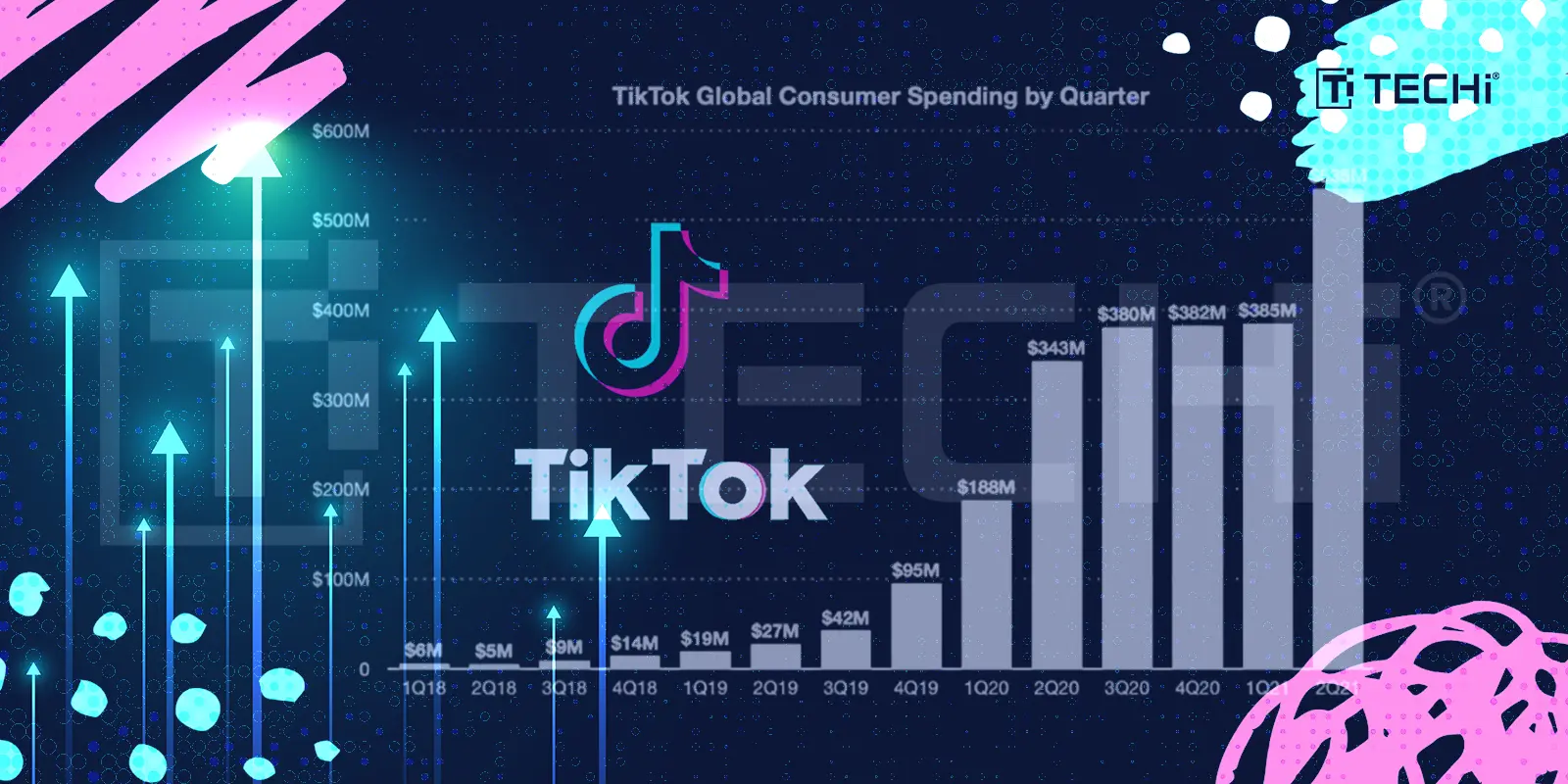

According to Angelo Zino, Senior Vice President at CFRA Research, TikTok’s U.S. business could be worth more than $60 billion as the U.S. government demands that it be sold or banned. Several buyers have emerged hoping to snap up one of the most influential social media platforms in the world.

TikTok’s Ban:

To make sense of TikTok’s uncertain future, we need to revisit the key events that have shaped its rocky relationship with the U.S. government. In August 2020, President Donald Trump signed an executive order prohibiting transactions with ByteDance, effectively seeking to ban the app in the U.S. The administration pushed for a forced sale of TikTok’s U.S. operations, with Microsoft, Oracle and Walmart lining up as potential buyers. A U.S. judge temporarily blocked Trump’s executive order, allowing TikTok to keep operating while legal proceedings continued.

Bipartisan efforts to address national security concerns about TikTok continued under former President Joe Biden. In April 2024, the U.S. House of Representatives passed legislation directly targeting TikTok, and the bill later made its way through the Senate. President Biden signed it into law, requiring that TikTok either be sold or be banned in the country.

In response, TikTok sued the U.S. government, arguing that the ban was unconstitutional and violated the First Amendment. The U.S. Supreme Court upheld the Protecting Americans from Foreign Adversary Controlled Applications Act (PAFACA), popularly known as “the TikTok ban.”

Trump’s Reversal and Temporary Extension:

Astonishingly, Trump filed a court brief opposing the impending ban on TikTok, suggesting that he wanted to find a way to keep the app in the U.S. After the Supreme Court’s ruling, TikTok briefly shut down in the U.S. and came back less than 12 hours later with a statement that read, “As a result of President Trump’s efforts, TikTok is back in the U.S.”

On January 20, Trump signed an executive order delaying the ban for 75 days, giving TikTok more time to sell a stake or reach some kind of agreement. Trump has proposed a 50-50 ownership deal between ByteDance and a U.S. company, although nothing has been finalized yet.

Potential Buyers for TikTok in the U.S.:

Several investors and companies have emerged as prospective buyers of TikTok’s U.S. operations. Here is who is vying for ownership:

1. The People’s Bid for TikTok:

Organized by Project Liberty founder Frank McCourt, The People’s Bid aims to promote open-source initiatives that prioritize privacy and user control over data. Kevin O’Leary, an investor and television personality, joined The People’s Bid on January 6 and has previously signaled interest in buying TikTok for $20 billion. Tim Berners-Lee, inventor of the World Wide Web, who said in a statement that “users should have an ability to control their own data,” is also involved in the bid, along with David Clark, a senior research scientist at the MIT Computer Science and Artificial Intelligence Laboratory.

2. American Investor Consortium:

Headed by Jesse Tinsley, CEO of Employer.com, this consortium recently made an all-cash $30 billion bid for TikTok’s U.S. operations. Its participants include David Baszucki, co-founder and CEO of Roblox; Nathan McCauley, CEO of the crypto platform Anchorage Digital; and Jimmy Donaldson (MrBeast), the famous YouTube content creator.

3. Other Interested Buyers:

Other interested buyers include Bobby Kotick, the former Activision CEO, who is likely looking at how TikTok could be integrated with gaming. Steven Mnuchin, who served as U.S. Treasury Secretary under Trump, has also re-entered the discussion. Oracle tried to acquire TikTok in 2020, and co-founder Larry Ellison is said to still be interested. Walmart expressed interest in 2020 and could see value in TikTok’s e-commerce potential. Microsoft, a top contender in 2020, has reportedly renewed its interest. Rumble, the alternative video-sharing platform, wants to buy TikTok and serve as its cloud technology partner, and Perplexity AI also submitted a bid last month.

TikTok’s Future in the U.S.:

The coming months will determine whether TikTok continues in the U.S. under new ownership or faces another legal battle. The outcome could reshape how millions of creators and businesses use social media and set a precedent for how the U.S. treats foreign-owned digital platforms. What is certain is that TikTok will shape not only the future of social media but also the broader conversation about data privacy, tech regulation and digital influence.